INTRODUCTION

The following lab demonstrates how to delineate (subset) a study area from large satellite imagery and how to synchronize satellite imagery with Google Earth. It also demonstrates image fusion to optimize spatial resolution and radiometric enhancement for better interpretation. Finally, it demonstrates resampling, image mosaicking, and binary change detection. All techniques were applied to satellite imagery of Eau Claire, WI. The lab is divided into seven parts: Image Subsetting, Image Fusion, Radiometric Enhancement, Synchronizing Google Earth, Resampling, Image Mosaicking, and Binary Change Detection.

METHODOLOGY

IMAGE SUBSETTING

To create a smaller study area (Eau Claire/Chippewa Falls) from a large satellite image (Eau Claire and surrounding counties), an inquire box was drawn and the Subset & Chip function was run. Next, to subset a non-rectangular area, an area of interest (AOI) was delineated. A shapefile of the AOI was loaded over the original satellite image, delineated using the paste from selected object function, and saved as an AOI layer. The Subset & Chip function was then run again using this AOI.
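The two subsetting approaches can be sketched with NumPy. The sketch assumes the image is held as a (bands, rows, cols) array and that the AOI shapefile has already been rasterized to a boolean mask on the same grid. The function names and the zero fill value are illustrative assumptions; this is not how ERDAS implements Subset & Chip.

```python
import numpy as np


def subset_box(image, row0, row1, col0, col1):
    """Rectangular subset, analogous to an inquire box.

    image has shape (bands, rows, cols); rows row0:row1 and
    columns col0:col1 are kept for every band.
    """
    return image[:, row0:row1, col0:col1]


def subset_aoi(image, aoi_mask, fill=0):
    """Non-rectangular subset from a boolean AOI mask (True = inside).

    The image is cropped to the AOI's bounding box, then pixels outside
    the AOI are set to a background fill value.
    """
    rows = np.flatnonzero(np.any(aoi_mask, axis=1))
    cols = np.flatnonzero(np.any(aoi_mask, axis=0))
    r0, r1 = rows[0], rows[-1] + 1
    c0, c1 = cols[0], cols[-1] + 1
    chip = image[:, r0:r1, c0:c1].copy()
    inside = aoi_mask[r0:r1, c0:c1]
    chip[:, ~inside] = fill  # blank out every band outside the AOI
    return chip
```

The inquire box is just an index range. The AOI version needs the extra mask, which reflects the extra preparation effort of the shapefile-based method.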

IMAGE FUSION

To optimize spatial resolution for visual interpretation, a coarse-resolution multispectral (reflective) image was combined with a panchromatic image of higher spatial resolution. This image fusion retained the spectral information of the reflective image while raising its spatial resolution to that of the panchromatic image. The fusion was performed with the resolution merge function, found under the Pan Sharpen icon on the Raster tab, using the multiplicative method and nearest neighbor resampling.
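The multiplicative method can be sketched minimally in NumPy under three assumptions. The panchromatic band is an exact integer factor larger than the multispectral bands (2 for 30 m to 15 m). Each band is upsampled with nearest neighbor resampling. The product is not rescaled. ERDAS's resolution merge may differ in details not described here, and the function names are hypothetical.

```python
import numpy as np


def upsample_nearest(band, factor):
    """Nearest neighbor upsampling: copy each coarse pixel into a factor x factor block."""
    return np.repeat(np.repeat(band, factor, axis=0), factor, axis=1)


def multiplicative_merge(ms, pan):
    """Multiplicative pan sharpening sketch.

    ms:  (bands, rows, cols) multispectral image at coarse resolution
    pan: (rows * f, cols * f) panchromatic band at fine resolution
    Returns a float array of shape (bands, rows * f, cols * f).
    """
    factor = pan.shape[0] // ms.shape[1]
    assert pan.shape == (ms.shape[1] * factor, ms.shape[2] * factor)
    fused = np.empty((ms.shape[0],) + pan.shape, dtype=np.float64)
    for b in range(ms.shape[0]):
        # Bring the band onto the pan grid, then multiply pixel by pixel.
        fused[b] = upsample_nearest(ms[b].astype(np.float64), factor) * pan
    return fused
```

Products of two BVs can far exceed the input range. A real workflow would therefore rescale the output (for example back to 8-bit) before display.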

RADIOMETRIC ENHANCEMENT

To enhance the spectral and radiometric quality of the image for analysis, a simple haze reduction was performed. The haze reduction function, found under the Radiometric icon on the Raster tab, was run with default parameters.
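The lab does not describe ERDAS's haze reduction algorithm. The sketch below therefore swaps in a simpler, well-known technique, dark-object subtraction, only to illustrate the idea of removing an additive haze component. It assumes NumPy, a (bands, rows, cols) array, and that the darkest pixels in each band should be near zero. The function name is hypothetical.

```python
import numpy as np


def dark_object_subtraction(image, percentile=0.0):
    """Subtract a per-band 'dark object' value treated as additive haze.

    By default the band minimum is used; a small percentile (e.g. 1)
    is less sensitive to noisy outliers. Results are clipped at zero.
    """
    out = np.empty(image.shape, dtype=np.float64)
    for b in range(image.shape[0]):
        dark = np.percentile(image[b], percentile)
        out[b] = np.clip(image[b] - dark, 0, None)
    return out
```

Dark-object subtraction removes only one offset per band. It cannot remove localized haze such as the patch in the lower right of the Figure 4 scene; that requires a spatially varying correction.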

SYNCHRONIZING GOOGLE EARTH

To provide additional information and a current satellite image to aid in image classification, Google Earth was linked to the image viewer in ERDAS. This was done by opening the Google Earth tab, connecting to Google Earth, and synchronizing it to the view.

RESAMPLING

Resampling up reduces pixel size and therefore increases file size. It does not add new spatial information, but it can improve the visual clarity of an image. Two resampling runs were performed, one using the nearest neighbor method and the other using bilinear interpolation. Both used the resample pixel size function under the Spatial icon on the Raster tab, and in both cases pixel size was resampled from 30 x 30 m to 15 x 15 m. The only difference between the two runs was the choice of resampling method.
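The two resampling methods can be compared with a small NumPy sketch. It assumes an integer upsampling factor (2 for 30 m to 15 m) and pixel-center alignment between the input and output grids. The function names are hypothetical, and clamping at the image border is an assumption that may differ from ERDAS.

```python
import numpy as np


def resample_nearest(band, factor):
    """Each output pixel takes the BV of the input pixel it falls inside."""
    return np.repeat(np.repeat(band, factor, axis=0), factor, axis=1)


def resample_bilinear(band, factor):
    """Each output pixel gets a distance-weighted average of the four nearest input pixels."""
    band = band.astype(np.float64)
    rows, cols = band.shape
    # Output pixel centers expressed in input pixel coordinates (center-aligned),
    # clamped so border pixels reuse the edge values.
    r = np.clip((np.arange(rows * factor) + 0.5) / factor - 0.5, 0, rows - 1)
    c = np.clip((np.arange(cols * factor) + 0.5) / factor - 0.5, 0, cols - 1)
    r0, c0 = np.floor(r).astype(int), np.floor(c).astype(int)
    r1, c1 = np.minimum(r0 + 1, rows - 1), np.minimum(c0 + 1, cols - 1)
    wr = (r - r0)[:, None]  # vertical weights, shape (R, 1)
    wc = (c - c0)[None, :]  # horizontal weights, shape (1, C)
    top = band[np.ix_(r0, c0)] * (1 - wc) + band[np.ix_(r0, c1)] * wc
    bottom = band[np.ix_(r1, c0)] * (1 - wc) + band[np.ix_(r1, c1)] * wc
    return top * (1 - wr) + bottom * wr
```

Consider a 2 x 2 band whose rows are both [0, 100]. Each output row is [0, 0, 100, 100] with nearest neighbor and [0, 25, 75, 100] with bilinear interpolation. Nearest neighbor only copies existing BVs. Bilinear interpolation creates intermediate BVs, which is why it smooths boundaries.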

IMAGE MOSAICKING

When the AOI is larger than the spatial extent of a single satellite scene, image mosaicking combines multiple satellite images so that they fully cover the AOI. Mosaicking was performed twice, first with Mosaic Express and then with Mosaic Pro; both are found under the Mosaic icon on the Raster tab. For Mosaic Express, the multiple images in virtual mosaic option was selected on the Multiple tab. Background transparent and fit to frame were selected on the Raster Options tab. After the two images were loaded, all default options were used. For Mosaic Pro, both images were loaded first. The use histogram matching option was then selected in the Color Corrections icon, and overlap areas was chosen as the matching method in the Set icon.
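The difference between the two mosaics can be illustrated with a NumPy sketch, assuming both images are already on a common grid with NaN marking no data. Without matching, the top image simply overwrites the overlap, as described for Mosaic Express. With matching, the bottom image is first histogram-matched to the top image using only the overlap pixels, then overlaid. This is a generic cumulative-histogram matching sketch with hypothetical function names, not Mosaic Pro's exact algorithm.

```python
import numpy as np


def match_histogram(values, src_overlap, ref_overlap):
    """Map `values` so the src_overlap distribution matches ref_overlap.

    Uses the empirical cumulative histograms (CDFs) of the overlap pixels only.
    """
    src_bv, src_counts = np.unique(src_overlap, return_counts=True)
    ref_bv, ref_counts = np.unique(ref_overlap, return_counts=True)
    src_cdf = np.cumsum(src_counts) / src_counts.sum()
    ref_cdf = np.cumsum(ref_counts) / ref_counts.sum()
    # CDF position of each value within the source overlap distribution
    # (values outside the overlap range are clamped to the ends).
    cdf = np.interp(values, src_bv, src_cdf)
    # Reference BV found at the same CDF position.
    return np.interp(cdf, ref_cdf, ref_bv)


def mosaic(top, bottom, match=False):
    """Combine two co-registered images; NaN means no data.

    The top image always wins where both images have data.
    """
    bottom = bottom.astype(np.float64).copy()
    overlap = ~np.isnan(top) & ~np.isnan(bottom)
    if match:
        valid = ~np.isnan(bottom)
        bottom[valid] = match_histogram(bottom[valid], bottom[overlap], top[overlap])
    return np.where(np.isnan(top), bottom, top)
```

Matching shifts the whole bottom image toward the top image's BVs, so values just outside the overlap no longer jump abruptly at the seam.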

BINARY CHANGE DETECTION

To map the pixels whose brightness values (BVs) changed in Eau Claire County and neighboring counties, binary change detection was performed. The Two Image Functions tool was selected from the Functions icon on the Raster tab. For simplicity, only layer 4 of each image was analyzed. In the output options section, the Operator was set to subtraction (-). After this function was run, the histogram of the resulting difference image was examined to estimate the thresholds beyond which change would occur.
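The differencing and two-sided threshold step can be sketched as follows. The sketch assumes NumPy, 8-bit input bands, and population standard deviation (ddof=0); ERDAS may compute these statistics differently. Casting to a signed type before subtracting avoids the wrap-around that unsigned 8-bit arithmetic would cause. The default k = 1.5 mirrors the multiplier used in the results, and the function names are hypothetical.

```python
import numpy as np


def difference_image(date1_band, date2_band):
    """Signed difference (date 1 minus date 2); cast first so uint8 values do not wrap."""
    return date1_band.astype(np.int16) - date2_band.astype(np.int16)


def change_thresholds(diff, k=1.5):
    """Lower and upper change thresholds: mean minus/plus k standard deviations."""
    mu = diff.mean()
    sigma = diff.std()
    return mu - k * sigma, mu + k * sigma


def change_pixels(diff, k=1.5):
    """True where the difference falls beyond either threshold."""
    lo, hi = change_thresholds(diff, k)
    return (diff < lo) | (diff > hi)
```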

To map the change, a model was built in Model Maker under the Toolbox tab. The model connected two input rasters (one for each image) to a function, which wrote to an output raster. The function subtracted raster 2 from raster 1 and added a constant of 127 to avoid negative BVs.

Adding this constant shifted the histogram in the positive direction, placing the change threshold in the upper tail of the histogram. A new threshold was then calculated as the mean plus 3 standard deviations. Using this output image as the input raster, a second model was built. Its conditional function assigned 0 to all values below the threshold and 1 to all values above it.
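The two Model Maker steps (an offset difference, then a conditional on the mean plus 3 standard deviations) can be sketched in NumPy as follows. The function names are hypothetical, and the model is assumed to operate on single 8-bit bands.

```python
import numpy as np


def offset_difference(raster1, raster2, offset=127):
    """raster1 - raster2 + offset, computed in a signed type so nothing wraps."""
    return raster1.astype(np.int16) - raster2.astype(np.int16) + offset


def binary_change_mask(shifted, k=3.0):
    """Return (mask, threshold): 1 where the value exceeds mean + k * std, else 0."""
    threshold = shifted.mean() + k * shifted.std()
    return (shifted > threshold).astype(np.uint8), threshold
```

Adding a constant shifts the mean by that constant but leaves the standard deviation unchanged, so the threshold also moves by exactly 127. With 8-bit inputs the difference ranges from -255 to 255. An offset of 127 therefore keeps values non-negative only where the difference is at least -127.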

The resulting image was then added to ArcMap and overlaid on an image of the surrounding area. All areas shown in the ERDAS image produced by Model Maker represent pixels whose BVs changed between the two original images.

RESULTS

IMAGE SUBSETTING

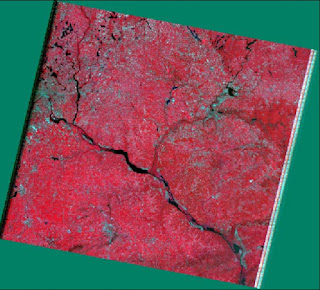

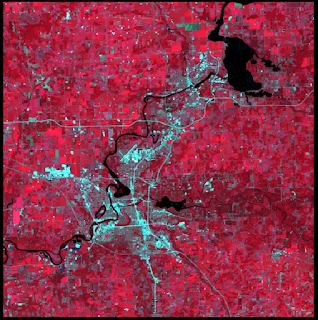

Figure 1 is a subset of the Chippewa Falls/Eau Claire area taken from the original satellite image of Eau Claire and surrounding counties. This subset is limited to a square area.

Figure 1

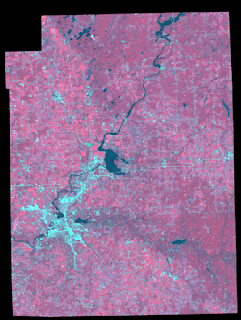

Figure 2 is also a subset of the Chippewa Falls/Eau Claire area taken from the original satellite image of Eau Claire and surrounding counties. Here, however, the area of interest (AOI) was delineated by a previously made shapefile, which allows non-rectangular AOIs to be examined.

Figure 2

Both the inquire box and AOI methods can be used to subset a larger satellite image, and each has advantages. The inquire box method is faster and adequate for less intensive work. The AOI method allows precise, non-rectangular delineation, but it requires the extra effort of creating a shapefile to subset with.

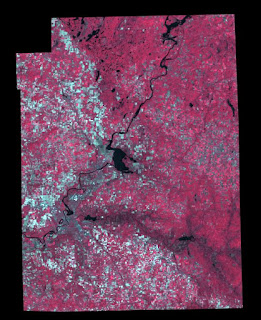

IMAGE FUSION

The subset image from Figure 2 was used, but its 30 x 30 m spatial resolution needed to be improved to 15 x 15 m. The reflective image was fused with a 15 x 15 m panchromatic image. This retained the reflective properties of the Figure 2 image while increasing its spatial resolution to that of the panchromatic image. The result is shown in Figure 3 and can be compared with Figure 2.

Figure 3

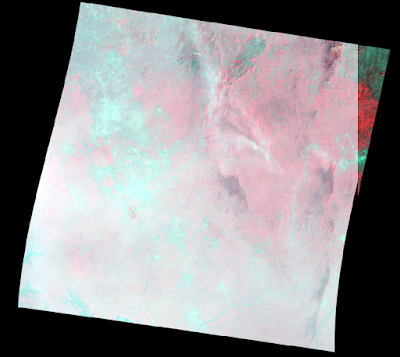

RADIOMETRIC ENHANCEMENT

Figure 4 shows satellite imagery of the Eau Claire County area after haze reduction. In the original imagery, a white haze was concentrated in the lower-right portion of the image. After the basic haze reduction, only a slight shadow remains where the haze-corrupted data were recorded.

Figure 4

SYNCHRONIZING GOOGLE EARTH

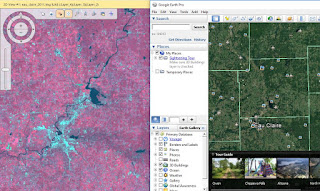

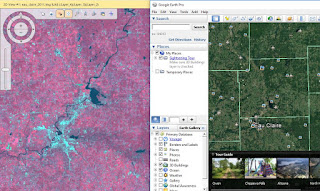

Figure 5 is a screen capture taken after Google Earth was connected, linked, and synchronized to ERDAS. It shows the supplementary information that can be gathered, such as labels for cities, rivers, and other features, along with many photographs taken in the area.

Figure 5

RESAMPLING

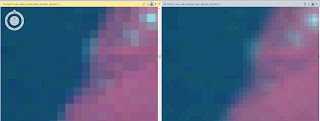

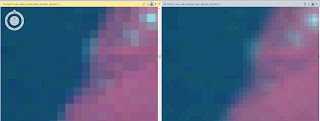

Figure 6 shows a zoomed-in view of the Chippewa Falls/Eau Claire area after resampling from 30 x 30 m to 15 x 15 m pixels. The left window shows the nearest neighbor result, and the right window shows the bilinear interpolation result.

Figure 6

When resampling up, the new, smaller pixels need brightness values (BVs). The nearest neighbor method assigns each new pixel the BV of the nearest original pixel. This is advantageous because it requires little processing power, is fast, and keeps the original BVs. However, when viewed closely it produces a stair-stepping effect along boundaries. Here, the edge between the Eau Claire River and the land appears jagged and less defined. In the right window, which used bilinear interpolation, the boundary between river and land is smoother and better defined. Bilinear interpolation assigns each new pixel a distance-weighted average of the four nearest original pixels. This gives a more gradual transition across boundaries where neighboring pixels differ greatly in BV.

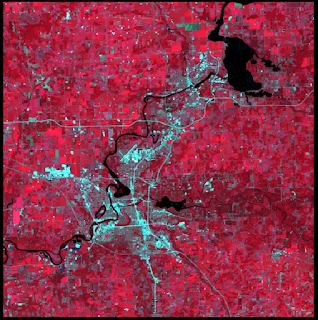

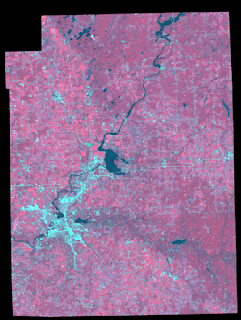

IMAGE MOSAICKING

In Figure 7, the mosaic made with Mosaic Express is shown at the top and the Mosaic Pro mosaic at the bottom. With Mosaic Express, pixels in the area where the images overlap simply take the BVs of the top image. This preserves the original BVs. However, it produces a very abrupt transition between the top and bottom images because the two histograms are not matched.

Figure 7

As the bottom image shows, the Mosaic Pro mosaic has a much smoother transition of BVs between the two images. It requires more processing and therefore takes longer. The results are much better, however, because histogram matching is computed from the pixels in the overlap area and adjusts the BVs so that the two images' histograms agree.

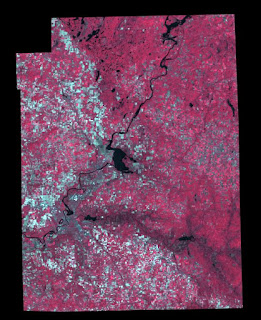

BINARY CHANGE DETECTION

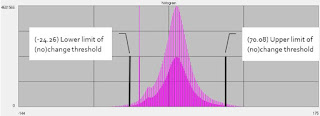

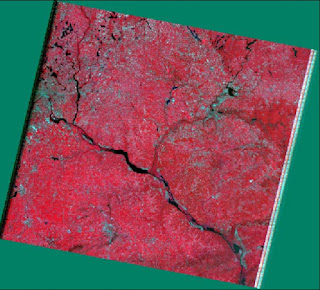

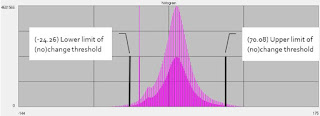

After the image differencing function was run, the histogram of the resulting image was produced, as shown in Figure 8. Adding 1.5 standard deviations to, and subtracting them from, the mean BV gave predicted positive (70.08) and negative (-24.26) thresholds for BV change on the histogram.

Figure 8

Figure 8 shows the ERDAS image produced by two functions. The first subtracted the second raster from the first. The second classified each value as change or no change depending on whether it exceeded the threshold.

This image was overlaid on another image of the same area in ArcMap, where all red pixels represent change from one raster to the other.

SOURCES

Data was obtained from the drive at the University of Wisconsin-Eau Claire at

Q/StudentCoursework/Wilson/GEOG338-001/Lab4

Satellite imagery was obtained from

Earth Resources Observation and Science Center, United States Geological Survey.

The shapefile used for image subsetting was obtained from

Mastering ArcGIS 6th edition Dataset by Maribeth Price, McGraw Hill, 2014.